A few weeks ago, I visited the Privacy and Freedom conference at the Center for Interdisciplinary Research (ZiF), Bielefeld University, which was very informative and insightful. It was organized by the project Transformation of Privacy (funded by Volkswagen Foundation). Like our new ABIDA project, it combines very different disciplinary perspectives including law, political science, communication science and informatics to tackle the challenges resulting from ICT – here with a particular focus on privacy and freedom.

The ambivalence of privacy

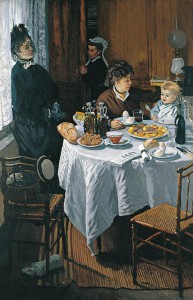

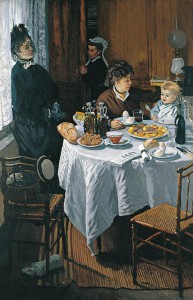

The two-day conference brought together scholars with diverse backgrounds, offering a broad yet detailed and differentiated look at the relation between privacy and freedom, today and in the past. Although not always easy to follow, the accounts from political theory (delivered by Dorota Mokrosinska, Andrew Roberts, Sandra Seubert and the keynote speaker Annabelle Lever, not to forget the critical audience of around 50 people) contributed greatly to a deeper understanding of how the various factors interact that we need to consider when we think about these terms. Privacy is relative, historically but also socially. Towards the end of the conference, Rüdiger Grimm referred to a powerful example to illustrate this: Claude Monet’s painting “Le déjeuner.”

Claude Monet’s painting “Le déjeuner”: Too private for its time?

Today, it is difficult to imagine that this scene could be controversial in any regard. But the jury of the Salon in Paris rejected it because such “intimate” moments were regarded as too private for a public audience. This seems hard to believe in an age in which people share even the smallest moments of their lives with a (potential) mass audience via social media.

Privacy is also relative in a social sense, differing from context to context, as Dorota Mokrosinska pointed out: We have no problem revealing our naked bodies to our doctors but wouldn’t show them our bank account. At the same time, bankers may know all the details of our financial life, while most of us would be rather reluctant to strip naked in front of them. This is exactly the challenge we are facing in the context of Big Data, as the new methods and technologies collect, combine and correlate ever more types of data that used to be either nonexistent or unconnected.

Privacy and power

However, it is not enough to conceptualize privacy as individual secrets that ought to be protected from others. While in some contexts privacy may be an enabler of freedom by providing personal autonomy, in others it can be a tool for repression. For example, women have been systematically kept away from public life by tying them to the privacy of their households. Therefore, we can never talk about privacy without talking about equality, as Annabelle Lever pointed out in her passionate keynote. Privacy comes with costs and benefits, but these are very unequally distributed, Lever explained. Quite clearly, this means we need to consider a classic sociological topic if we want to understand privacy and its implications: power structures. Sandra Seubert also referred to this in her talk: Drawing on Adorno and Horkheimer’s reflections on the culture industry, she described how users of popular web platforms contribute to stabilizing power structures. Since these platforms have penetrated more or less all areas of life, resistance is almost impossible and the individual is practically forced to co-produce the power of external forces. Nevertheless, I agree with a comment from the audience reminding us that power structures in the online context do not just reproduce “old” power structures; they also give new power to the individual (e.g. when individuals threaten others’ privacy by publishing confidential material about them).

Empirical perspectives on privacy

A strength of the conference was that the audience wasn’t left alone with these important but partly highly abstract theoretical reflections on privacy. Sessions on the communication and information science perspectives (and beyond) helped to contextualize these thoughts with empirical, experimental and technical insights. The various examples showcased how our data-driven world challenges privacy, leading repeatedly to the normative question of what we should do about it. Fortunately, this question was asked in light of the knowledge resulting from the presented research projects, which kept the discussion from remaining in a purely imaginative sphere of wishful thinking. As Laura Brandimarte pointed out with regard to her research perspective of behavioral economics: We need to understand how people actually make decisions – not just how they should decide. She and her colleagues conducted a number of experiments on the perception of privacy threats, e.g. by giving people varying options for controlling their privacy in a web survey. Finding that more options do not necessarily lead to more privacy-aware actions, the researchers conclude:

“The paradoxical policy implication is that Web 2.0 applications, by giving greater freedom and power to reveal and publish personal information, may lower the concerns that people have regarding control over access and usage of that information.” (Brandimarte et al. 2013, quoted from pre-print)

In any case, most people don’t seem to be very concerned about their data. Sven Jöckel studied smartphone users’ heuristics for selecting apps. In his small case study, he observed that the majority of the participants (62 %) spent only around 2 seconds reading the app permissions. Bigger factors seem to be branding effects or recommendations by friends. This rationale became clear when Jöckel referred to a user who appeared very privacy-concerned at first, since he refused to install an app due to its extensive permission requests. Yet when he selected another app, he did not pay any attention to the permissions it requested because he recognized the brand, which he obviously trusted.

Given such observations, it becomes clear that raising users’ awareness of the terms they agree to when signing up for a service is an important goal. In his talk, Stefan Katzenbeisser also stressed missing awareness as one of the key obstacles for privacy protection tools. From his informatics point of view, he strongly and convincingly criticized suggestions made by some policy-makers to deliberately weaken data protection to enable surveillance, citing PGP developer Phil Zimmermann’s (1999) famous sentence: “If privacy is outlawed, only outlaws will have privacy”.

So what can we do?

So what can we do to enhance users’ awareness of privacy threats? I always thought that making terms of use more accessible, for example by providing easily understandable icons, could be a good first step to enhance what Simone Fischer-Hübner called “ex ante privacy” in her presentation.

Privacy icons suggested by the former vice president of the European Parliament Alexander Alvaro

There are several initiatives in this direction (see for example this helpful blog post by Ann Wuyts). However, developing icons that are truly telling is rather challenging. As Fischer-Hübner and her colleagues pointed out in an ENISA report, many icons “(…) do not seem to be very intuitive and not easily and unmistakably recognizable by their symbolic depictions” (Tschofenig et al. 2013: 26). Just take a look at the icons above, suggested by the former vice president of the European Parliament, Alexander Alvaro, and the problem becomes evident. Thus, tools for enhancing “ex post privacy” (e.g. by giving insights into how our data is processed) are equally important, and in both cases user friendliness is crucial, as Fischer-Hübner argued.

Privacy veteran Roger Clarke also named usability as one of the reasons why the various privacy-enhancing technologies (PETs) he introduced in his talk haven’t been adopted more widely. Yet he sees such technical solutions as an appropriate answer to what he has termed “Privacy-Invasive Technologies”. After many years of experience as a privacy activist, Clarke has apparently lost faith in institutional solutions in favor of individualistic approaches:

“Unfortunately, the winners are generally the powerful, and the powerful are almost never the public and almost always large corporations, large government agencies, and organised crime. In the information era, the maintenance of freedom and privacy is utterly dependent on individuals understanding, implementing and applying technology in order to protect free society against powerful institutions.” (Clarke 2015)

By the way, I recommend checking out Clarke’s incredibly comprehensive website, which has charmingly withstood all web design trends since its establishment in 1994. You can also find his related paper and his slides (PDF) there.

Legal challenges

In the last regular session, the various challenges connected to privacy and freedom were addressed from the legal perspective. This was a much-needed point of view, as legislators struggle to keep up with the rapidly growing privacy threats in the context of the Internet. At the same time, the state plays an ambivalent role, as a protector but also a violator of citizens’ rights. From Snowden we learned that many conspiracy theories on surveillance are indeed not conspiracy theories, as Philipp Richter reminded the audience. Our privacy and freedom are threatened through the digital, so the laws have to become digital themselves. “If code is law, law must be code,” Richter argued in reference to Lawrence Lessig’s famous quote. Right now, laws are usually not directed at specific technologies. On the one hand, this allows them to stay valid even for future technologies. On the other hand, this means that more and more decisions have to be made by the judiciary, leading to legal uncertainty and reduced governmental power, as Richter convincingly stated. Johannes Eichenhofer as well as Gerrit Hornung gave an impression of the various existing laws and institutions relevant for “e-privacy” – ranging from Germany’s constitutional court, the EU and international law to the service providers, who were portrayed as (potential) violators but also guards of e-privacy. Unlike the other sessions, this one was held in German. Given the rather special language of the German legal system, this is understandable. The organizers’ solution was to have translators deliver the talks and discussions simultaneously in English. I have the utmost respect for the people facing this incredibly difficult task. However, I doubt that it was a good solution to transfer this challenge from the speakers to the translators, who then had to deal with it in real time.

Altogether, the “Privacy and Freedom” conference gave an encompassing yet profound and thought-provoking overview of the complex and diverse issues around these terms. The interdisciplinary approach was not only necessary, it also proved productive. For me personally, the conference served almost as an introduction to some of the important topics we will have to face in our project ABIDA – Assessing Big Data. As a policy-advising project, we will also be forced to tackle a question raised by Stefan Dreier at the closing session of the conference, which sums up the almost dilemma-like situation policy-makers have to face: Where do we draw the thin line between autonomy-enabling, paternalistic and freedom-limiting governance?

References

Brandimarte, L., Acquisti, A. and Loewenstein, G. (2013): Misplaced Confidences: Privacy and the Control Paradox, Social Psychological and Personality Science 4 (3), pp. 340-347.

Clarke, R. (2015): Freedom and Privacy: Positive and Negative Effects of Mobile and Internet Applications. Notes for the Interdisciplinary Conference on ‘Privacy and Freedom’, 4-5 May 2015, Bielefeld University. http://www.rogerclarke.com/DV/Biel15.html

Tschofenig, H., et al. (2013): On the security, privacy and usability of online seals. An overview, European Union Agency for Network and Information Security (ENISA). https://www.enisa.europa.eu/activities/identity-and-trust/library/deliverables/on-the-security-privacy-and-usability-of-online-seals/

Zimmermann, Philip (1999): Why I Wrote PGP. https://www.philzimmermann.com/EN/essays/WhyIWrotePGP.html